Artificial intelligence has been reshaping different industries and faces criticism for displacing people from their jobs. At the same time, it is also important to identify the potential of artificial intelligence to create new career opportunities. One of the most notable career opportunities in the domain of AI is prompt engineering. Candidates with expertise in prompt engineering implementation steps can help businesses leverage the value of prompt engineering to improve their AI systems. Large language models, or LLMs, are the most powerful tools in the AI landscape for performing different tasks, such as translating languages and generating text.

However, LLMs may present issues of usability and can offer unpredictable results that are different from the expectations of users. Prompt engineering involves the creation of prompts that can extract the desired output from LLMs. Prompt engineering has gained prominence in the existing fast-paced business environment for enhancing the abilities of LLMs to streamline processes and boost productivity. However, most businesses are oblivious to the potential of prompt engineering techniques and how to implement them.

Prompt engineering contributes to productivity through analysis and redesign of prompts for catering to the specific requirements of individual users and teams. Let us learn more about prompt engineering, the important techniques for prompting, and best practices for implementation of prompt engineering.

Why Is Prompt Engineering Important Now?

The best way to understand the significance of prompt engineering must start with a definition of prompt engineering. Prompt engineering is the technique used for Natural Language Processing or NLP for optimizing the performance and outputs of language models, such as ChatGPT.

The answers to “How to implement prompt engineering?” draw the limelight on structuring the text inputs for generative AI in a way that helps LLMs understand and interpret the query. When the LLM understands the query effectively, it can generate the expected output. Prompt engineering also involves fine-tuning large language models alongside optimizing the flow of conversation with LLMs.

You can understand the importance of prompt engineering by its capability to enable in-context learning with large language models. Prior to LLMs, AI and NLP systems could address only a few tasks, such as identification of objects and classification of network traffic. However, AI systems did not have the capability to take a few examples of input data and perform expected tasks.

The implementation of prompt engineering can help in leveraging the ability of LLMs to perform in-context learning. It helps in designing prompts with some examples of queries and the desired output. As a result, the model could improve the quality of performance for the concerned task.

In-context learning is a crucial feature due to its similarities to the learning approaches of humans. Repetitive practice can help a model in learning new skills instantly. With the help of in-context learning through prompt engineering, you can structure the output of a model and output style. Prompt engineering also presents many other advantages for LLM applications in businesses.

Dive into the world of prompt engineering and become a master of generative AI applications with the Prompt Engineer Career Path.

What are the Value Advantages of Prompt Engineering?

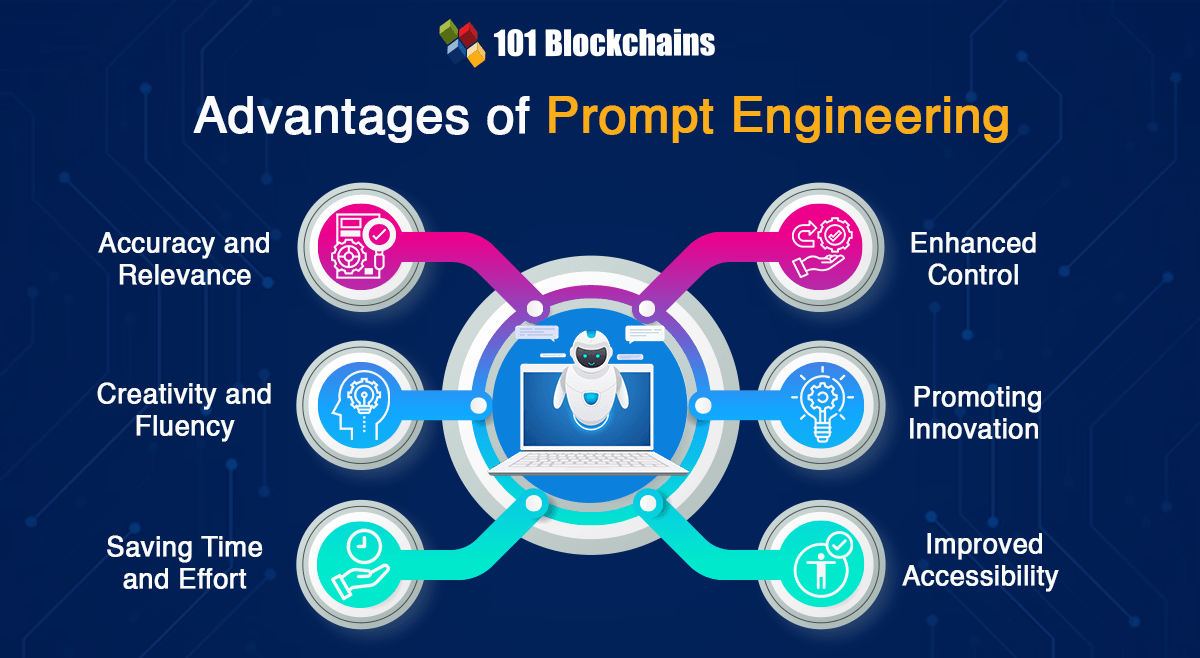

The best practices to implement prompt engineering in business applications also involve an in-depth understanding of the value advantages of prompting. Prompt engineering can help improve large language models with the following value benefits.

Careful design of the prompts can help LLMs find the required information for generating the desired outputs. As a result, it could ensure reduction of errors and ensure relevance of the output to the intent of users.

The effective use of prompt engineering in business operations involves providing specific instructions to LLMs. As a result, the model can produce creative outputs with improved fluency, thereby improving the effectiveness of language translation and content generation.

Well-designed prompts can help large language models in learning efficiently at a faster rate. It can help improve the overall performance of the model while saving time and resources.

With a detailed understanding of prompt engineering implementation steps, users in the domain of business can exercise more control over the output of LLMs. For example, users can specify the desired format, tone, and style of the output by the model. It can help businesses with tasks such as generating creative text and marketing copies.

Prompt engineering can also serve crucial value advantages to businesses by encouraging innovation. Developers and researchers can use LLMs to discover creative ways to resolve issues with innovative approaches by identifying new possibilities of prompt engineering.

Improvement in Accessibility

The effective implementation of prompt engineering can help ensure that a large language model is accessible to more users. Users who don’t have any experience in using AI can rely on easy-to-understand and simple prompts for generating high-quality output.

Identify the full potential of generative AI in business use cases and become an expert in generative AI technologies with the Generative AI Skill Path.

What are the Techniques for Prompt Engineering?

The review of common prompt engineering techniques would help you identify some notable mentions such as zero-shot prompting, one-shot prompting, and chain-of-thought prompting. However, in-context learning and prompt development are not the only techniques involved in the domain of prompt engineering. If you want to implement effective prompts for business applications, then you must know about concepts such as fine-tuning, pre-training, and embedding. Here is an overview of these techniques and their importance for prompt engineering.

The best practices for implementing prompts tailored to business use cases emphasize model pre-training as one of the crucial priorities. Pre-training helps the language model in understanding the semantics and structure of natural language. Generative AI models are trained with massive volumes of training data, extracted through scraping content from different books, snippets of code from GitHub, content from the internet, and Wikipedia pages.

It is important to remember that pre-training is a time-intensive and expensive process that requires technical expertise. The applications of prompt engineering in business can leverage the benefits of pre-training AI models with data related to the company. However, retraining the complete model from scratch when you introduce new products or updates in the knowledge base can be an expensive affair. In such cases, you can rely on embeddings.

Take your first step towards learning about artificial intelligence through AI Flashcards

Semantic embedding in prompt engineering can help prompt engineers in feeding a small dataset of domain knowledge to a large language model. The general knowledge of LLMs such as GPT-3 or GPT-4 is massive. However, it can offer responses with AI hallucinations about code examples of a new Python library or details of a new tool you are working on.

The practices to implement prompt engineering in business draw the limelight on the capability of embedding for feeding new data to the pre-trained LLM. As a result, you can achieve improved performance for particular tasks. At the same time, it is also important to note that embedding is a complicated and costly task. Therefore, you should go for embedding only when you want the model to acquire specific knowledge or feature certain behavioral traits.

Fine-tuning is an important tool for helping developers in adjusting the functionality of LLMs. It can serve as a crucial tool for scenarios involving changes in style, format, tone, and different qualitative aspects of the outputs. As a result, it can improve the chances of generating desired results with better quality.

The decision to fine-tune LLM models to suit specific applications should account for the resources and time required for the process. It is not a recommended process when you have to adapt the model for performing specific tasks.

The effective implementation of prompt engineering involves more than an in-depth understanding of prompting techniques. You must also have an in-depth understanding of the internal working mechanisms and limitations of Large Language Models. In addition, you must also know when and how to use in-context learning, fine-tuning, and embedding to maximize the value of LLMs in business operations.

Master the concepts of ChatGPT to boost your skills, improve your productivity, and uncover new opportunities with our ChatGPT Fundamental Course.

Steps for Implementing Prompt Engineering in Business Operations

The most effective approach for using prompt engineering involves following a step-by-step approach. You can rely on the following high-level framework for creating effective use cases of prompt engineering for your business.

The responses for “How to implement prompt engineering?” should begin with a clear impression of the goals for the prompts. First of all, you must ask yourself what you want to achieve with the prompts. The goals of the prompt for businesses could include creation of website content, analysis of online reviews, or development of sales scripts. Effective identification of the goals for prompt engineering can help in defining the direction of the prompt. It is also important to identify the context, constraints, and specific tasks associated with the prompts.

Some of the notable examples of defining goals can include creation of product descriptions, campaign brainstorming, and generation of creative social media posts. For example, you can define a prompt for creating descriptions of a new line of products with a specific theme.

Create the Prompt Elements with Precision

The next addition to prompt engineering implementation steps revolves around inclusion of the important prompt elements. You should define the essential elements for your prompts, such as role, context, tasks, examples, and constraints. Development of the right user persona ensures that the LLMs can produce outputs that can align with the expectations of the audience.

You should also add contextual information by identifying the core facets of the business that align with your target audience. Some of the crucial aspects that can help in designing prompts include a business overview, target audience, community engagement, and brand tone.

You can also ensure better results with use cases of prompt engineering in business by providing examples and listing out the constraints. With these elements, you can find effective ways to improve the quality of responses through the prompts.

The best practices for effective, prompt engineering to support business use cases also draw attention towards quality assurance. How can you ensure that you have generated high-quality prompts? The ideal answer for such questions is a credible, prompt testing and iteration process.

It is important to implement prompt engineering in business by emphasizing the optimal balance between flexibility and detail. The effectiveness of a prompt depends on its usability in different scenarios. Continuous iteration of the prompts could also help in improving the outputs of prompt engineering processes.

Want to understand the importance of ethics in AI, ethical frameworks, principles, and challenges? Enroll now in the Ethics Of Artificial Intelligence (AI) Course

What are the Challenges for Prompt Engineering?

Anyone interested in implementation of prompt engineering should also learn about the limitations of prompting. The critical challenges for prompt engineering include ethical considerations, prompt injection, ambiguous prompts, management of complex prompts, interpretation of model responses, and bias mitigation.

Ambiguous prompts can create problems for generating concise responses and could lead to AI hallucinations. A lack of ethical considerations for prompt design can also lead to negative outcomes from LLMs, such as unethical content, misinformation, or fake news. Another notable problem with utilizing prompt engineering in business use cases points to the risks of bias and fairness. It is important to ensure that your prompts create inclusive AI systems that respect and understand all types of users.

Another prominent challenge for creation of effective prompts to support business operations is prompt injection. It is a major vulnerability for generative AI, alongside other risks. Therefore, it is important to identify the best tools and preventive measures for ensuring safety from prompt injections. The length of a prompt could also present a crucial challenge for prompt engineers as the length can increase complexity of the prompts. You should maintain a balance between the length and complexity of the prompt to avoid the higher maintenance costs of prompts.

Learn about the fundamentals of Bard AI, its evolution, common tools, and business use cases with our Google Bard AI Course.

Conclusion

The popularity of generative AI technology will increase continuously in the future with expansion of their capabilities. Aspiring prompt engineers must look for the best practices to implement prompt engineering in business and achieve better results. In the long run, prompt engineering will become an essential requirement for optimizing AI systems to achieve desired objectives for businesses.

Prompt engineering is still in the initial stages and would take some time to evolve with new tools emerging every day. Growing adoption of generative AI systems and discovery of the power of LLMs have been drawing more attention toward prompt engineering. Learn more about the best practices for prompt engineering for business use cases right now.